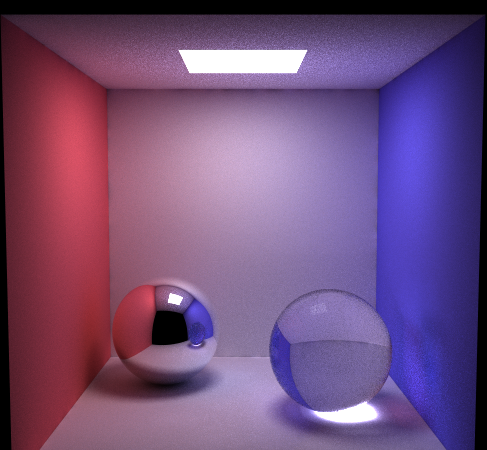

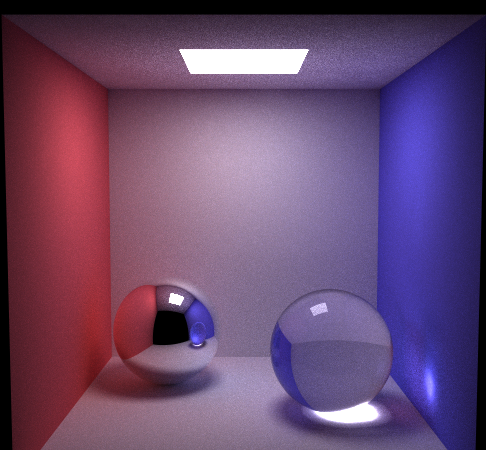

Both images were rendered with 1024 samples per pixel using PathTracer with AmbientOcclusion.

Depth of field is implemented in the Camera class. Instead of shooting every ray from the camera position, I pick a random ray origin from a uniform distribution on a disk that is centred on the camera and perpendicular to the viewing direction.
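As a rough illustration of the idea (not the code from the Camera class), a lens sample could be generated as sketched below. The `LensCamera` struct, its fields, and the function names are hypothetical. The sketch also assumes a thin-lens model in which every ray for a pixel is aimed at the point that the pinhole ray reaches after `focalDistance`; the text above does not state how the focus point is chosen.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
inline double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 normalize(Vec3 a) { return a * (1.0 / std::sqrt(dot(a, a))); }

struct Ray { Vec3 origin, dir; };

// Hypothetical thin-lens camera description (an assumption for this sketch).
struct LensCamera {
    Vec3 position;        // centre of the lens disk
    Vec3 right, up;       // orthonormal vectors spanning the disk (both normal to the view direction)
    double lensRadius;    // aperture radius; 0 degenerates to a pinhole camera
    double focalDistance; // distance along the pinhole ray to the point that stays sharp
};

// Map two uniform random numbers in [0,1) to a point on the unit disk.
// Taking sqrt(u1) makes the density uniform in area rather than in radius.
inline void sampleUniformDisk(double u1, double u2, double& dx, double& dy) {
    const double kPi = 3.14159265358979323846;
    const double r = std::sqrt(u1);
    const double phi = 2.0 * kPi * u2;
    dx = r * std::cos(phi);
    dy = r * std::sin(phi);
}

// pinholeDir: normalized direction a pinhole camera would use for this pixel.
inline Ray depthOfFieldRay(const LensCamera& cam, Vec3 pinholeDir, double u1, double u2) {
    assert(cam.focalDistance > 0.0 && cam.lensRadius >= 0.0);
    // The point all lens samples of this pixel converge on.
    const Vec3 focus = cam.position + pinholeDir * cam.focalDistance;
    double dx = 0.0, dy = 0.0;
    sampleUniformDisk(u1, u2, dx, dy);
    // Jittered ray origin on the lens disk.
    const Vec3 origin = cam.position
                      + cam.right * (dx * cam.lensRadius)
                      + cam.up * (dy * cam.lensRadius);
    return {origin, normalize(focus - origin)};
}
```

Objects near the focus point are hit by almost the same ray regardless of the lens sample and stay sharp, while everything else is averaged over a cone of rays and blurs with growing `lensRadius`.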

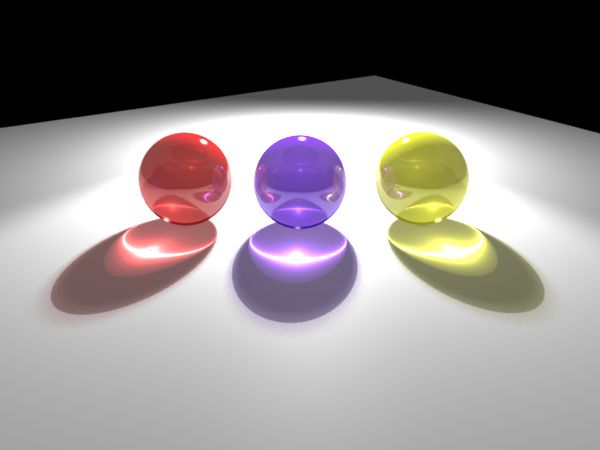

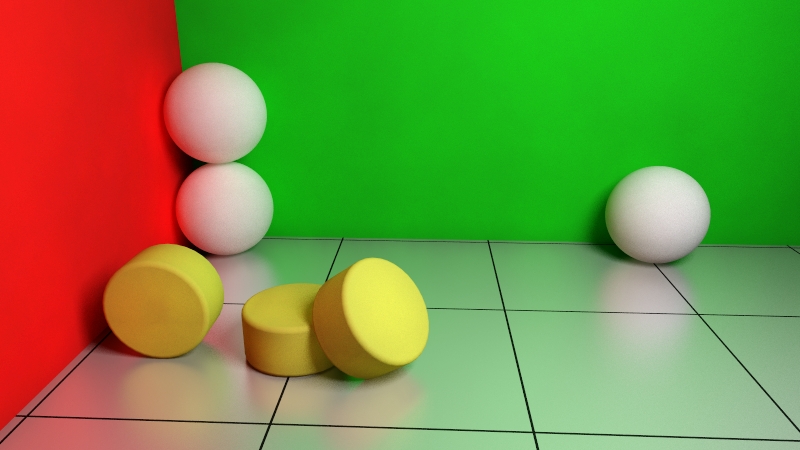

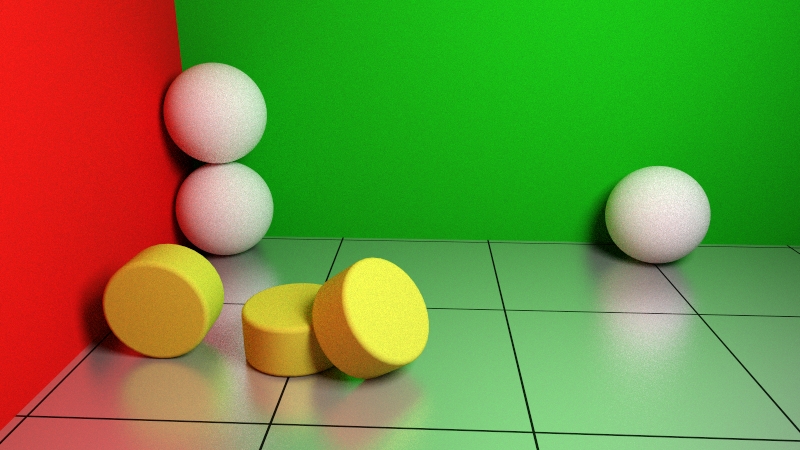

All spheres have the same material. Rendered with PathTracer with Caustic-Photons, 1024 samples.

This feature is implemented in the renderer classes (i.e. in FinalRenderer and in FinalPhotonMappingRenderer) in two places. First, when a ray is refracted into a transparent material, the color returned by the next recursion is attenuated by an exponential falloff: it is multiplied by the exponential of the negative product of the extinction coefficient and the travelled distance. The second place applies the same attenuation in the photon shooting stage, when a photon hits a dielectric surface.
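The attenuation described above is the Beer-Lambert law. A minimal sketch follows; the `Color` struct and the function name are hypothetical, and a per-channel extinction coefficient is an assumption (the renderer may just as well use a single scalar).

```cpp
#include <cassert>
#include <cmath>

struct Color { double r, g, b; };

// Beer-Lambert attenuation of light that travelled `distance` through an
// absorbing medium.
// extinction: absorption coefficient per color channel, in 1 / scene unit
//             (assumed to be a material parameter).
// distance:   length of the ray segment inside the medium.
inline Color attenuate(Color radiance, Color extinction, double distance) {
    assert(distance >= 0.0);
    return {radiance.r * std::exp(-extinction.r * distance),
            radiance.g * std::exp(-extinction.g * distance),
            radiance.b * std::exp(-extinction.b * distance)};
}
```

The same function can serve both places mentioned in the text: applied to the radiance returned by the recursive trace of a refracted ray, and applied to the power of a photon that has crossed the inside of a dielectric object.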

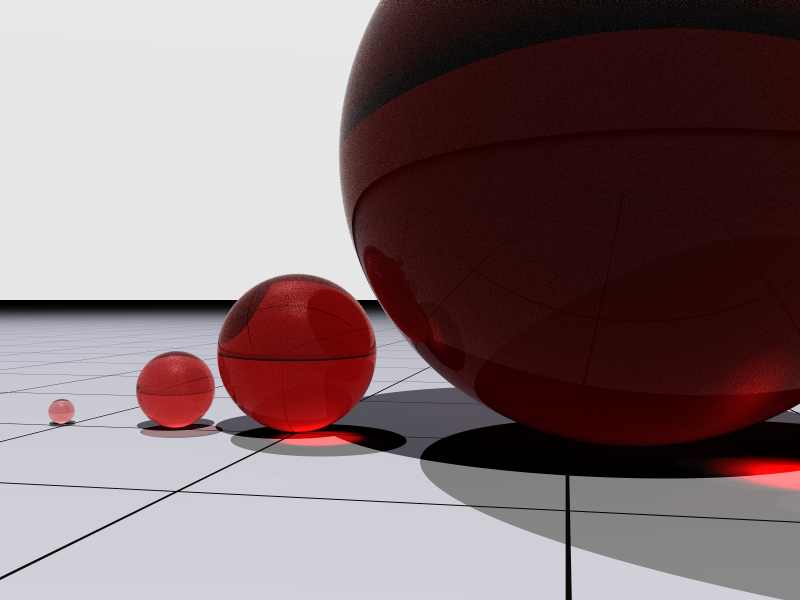

Left: no normal interpolation, right: interpolated vertex normals. PathTracer, 100 samples.

The interpolation of the normals can be found in Mesh::intersectTriangle.
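For readers unfamiliar with the technique, interpolating vertex normals usually means blending them with the barycentric coordinates of the hit point. The sketch below shows that blend in isolation; the function name and the `Vec3` type are hypothetical and do not reflect the actual signature of Mesh::intersectTriangle.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator*(Vec3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }

// n0, n1, n2: normals stored at the three triangle vertices.
// b1, b2:     barycentric coordinates of the hit point with respect to
//             vertices 1 and 2; vertex 0 implicitly gets 1 - b1 - b2.
inline Vec3 interpolateNormal(Vec3 n0, Vec3 n1, Vec3 n2, double b1, double b2) {
    const double b0 = 1.0 - b1 - b2;
    const Vec3 n = n0 * b0 + n1 * b1 + n2 * b2;
    // The weighted sum of unit vectors is generally shorter than 1,
    // so the result has to be renormalized before shading.
    const double len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    assert(len > 0.0);
    return n * (1.0 / len);
}
```

Ray-triangle tests such as Möller-Trumbore already produce the two barycentric coordinates as a by-product, so the interpolation adds almost no cost to the intersection routine.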

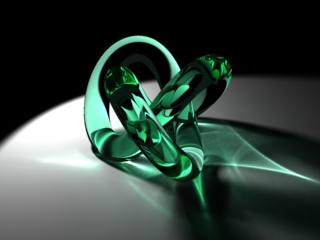

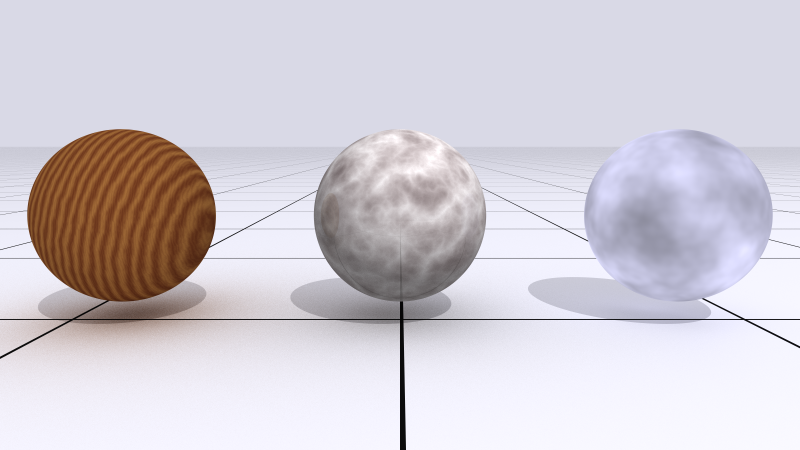

Three different materials using Perlin noise: wood, marble and cloudy sky. Rendered with PathTracer, 1024 samples.

I've implemented Perlin noise according to Perlin's "Improved Noise" paper [link]. To get different kinds of noise, I also implemented two functions that generate so-called fBm (fractional Brownian motion) and turbulence. The code is in a separate file called noise.h, and the different materials use it in Shaderfunctions.h. It took quite some time to figure out how to combine different noise values and functions to get the wood and marble materials.
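The following sketch shows how fBm and turbulence are commonly built on top of a base noise function. It is not the content of noise.h: the function names, the default `lacunarity` and `gain` values, and the constants in `marblePattern` are assumptions, and the marble formula is the textbook recipe rather than the material used for the images above. The base noise is passed in as a callable so the block stays independent of any particular Perlin implementation.

```cpp
#include <cassert>
#include <cmath>

// Noise: any callable of the form double(double x, double y, double z),
// e.g. a Perlin noise function returning values roughly in [-1, 1].

// Fractional Brownian motion: sum of noise octaves, each at a higher
// frequency (times lacunarity) and a lower amplitude (times gain).
template <typename Noise>
double fbm(Noise noise, double x, double y, double z, int octaves,
           double lacunarity = 2.0, double gain = 0.5) {
    assert(octaves > 0);
    double sum = 0.0, amplitude = 1.0, frequency = 1.0;
    for (int i = 0; i < octaves; ++i) {
        sum += amplitude * noise(x * frequency, y * frequency, z * frequency);
        amplitude *= gain;
        frequency *= lacunarity;
    }
    return sum;
}

// Turbulence: same octave sum, but of the absolute noise value, which
// introduces sharp creases where the noise crosses zero.
template <typename Noise>
double turbulence(Noise noise, double x, double y, double z, int octaves,
                  double lacunarity = 2.0, double gain = 0.5) {
    assert(octaves > 0);
    double sum = 0.0, amplitude = 1.0, frequency = 1.0;
    for (int i = 0; i < octaves; ++i) {
        sum += amplitude * std::fabs(noise(x * frequency, y * frequency, z * frequency));
        amplitude *= gain;
        frequency *= lacunarity;
    }
    return sum;
}

// Textbook marble: sine stripes along x whose phase is distorted by
// turbulence. Returns a blend factor in [0, 1] for mixing two colors.
// The constants 4.0 and 5 are illustrative only.
template <typename Noise>
double marblePattern(Noise noise, double x, double y, double z) {
    return 0.5 * (1.0 + std::sin(x + 4.0 * turbulence(noise, x, y, z, 5)));
}
```

Wood is typically obtained in a similar way by perturbing the distance from an axis with noise and taking its fractional part to form rings, and a cloudy sky by mapping an fBm value directly to a blend between sky and cloud color.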

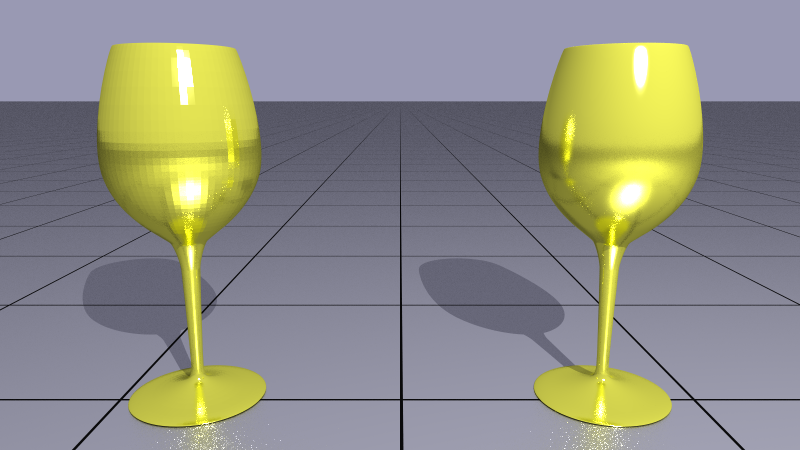

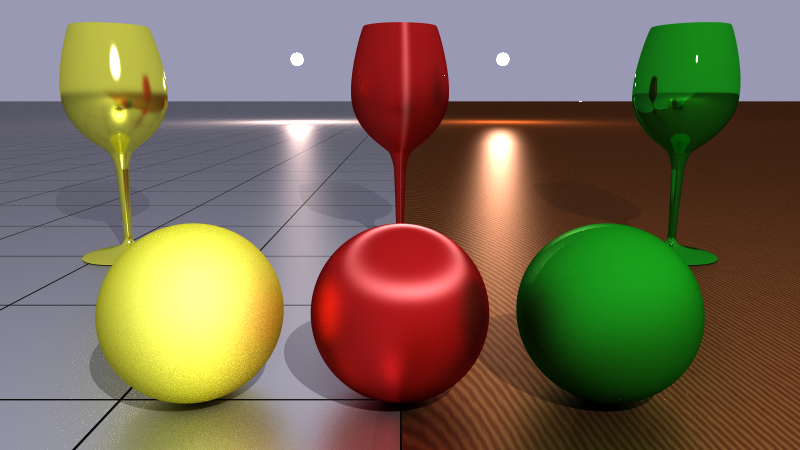

Different lighting models (with different parameters for the glasses/spheres): Blinn-Phong, Ward, Cook-Torrance. Floor: left: Blinn-Phong, right: Cook-Torrance. The reflections are only due to importance sampling of the GI rays (left side + middle: Phong, right side: Cook-Torrance). Rendered with PathTracer, 256 samples.

In addition to the Phong model I've implemented three other lighting models: Blinn-Phong, Ward (anisotropic) and Cook-Torrance. For Phong and Cook-Torrance I also implemented importance sampling for indirect rays. The code I submitted contained two small bugs: in the Cook-Torrance shader a minus sign is missing in the computation of the reflected ray, and at the same place in the Blinn-Phong shader I used the perturbed normal instead of the reflected ray. I corrected both bugs before rendering the image above. Each shader is implemented in a separate file named after the model. Generating a random direction according to a Beckmann distribution is implemented in the Warp.h file, like all other sampling methods.
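As an illustration of what sampling a Beckmann distribution involves, here is a minimal sketch based on the standard inversion of its cumulative distribution. The function names and the roughness parameter `alpha` are hypothetical and need not match Warp.h. The direction is generated in a local frame whose z axis is the surface normal, so a caller would still have to rotate it into world space.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

const double kPi = 3.14159265358979323846;

// Draw a microfacet normal around (0, 0, 1) with density D(m) * cos(theta),
// where D is the Beckmann distribution with roughness alpha.
// u1, u2: uniform random numbers in [0, 1).
inline Vec3 sampleBeckmann(double u1, double u2, double alpha) {
    assert(alpha > 0.0 && u1 >= 0.0 && u1 < 1.0);
    // Inverting the CDF 1 - exp(-tan^2(theta) / alpha^2) for tan^2(theta).
    const double tan2Theta = -alpha * alpha * std::log(1.0 - u1);
    const double cosTheta = 1.0 / std::sqrt(1.0 + tan2Theta);
    const double sinTheta = std::sqrt(std::fmax(0.0, 1.0 - cosTheta * cosTheta));
    const double phi = 2.0 * kPi * u2; // azimuth is uniform
    return {sinTheta * std::cos(phi), sinTheta * std::sin(phi), cosTheta};
}

// Solid-angle density of the direction produced by sampleBeckmann:
// D(m) * cos(theta) = exp(-tan^2(theta) / alpha^2) / (pi * alpha^2 * cos^3(theta)).
inline double beckmannPdf(Vec3 m, double alpha) {
    if (m.z <= 0.0) return 0.0;
    const double cos2 = m.z * m.z;
    const double tan2 = (1.0 - cos2) / cos2;
    const double a2 = alpha * alpha;
    return std::exp(-tan2 / a2) / (kPi * a2 * cos2 * m.z);
}
```

For importance sampling a Cook-Torrance lobe, the sampled vector is treated as the half vector: the outgoing direction is obtained by reflecting the view direction about it, and the density has to be converted accordingly by the Jacobian 1 / (4 (wo · m)).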

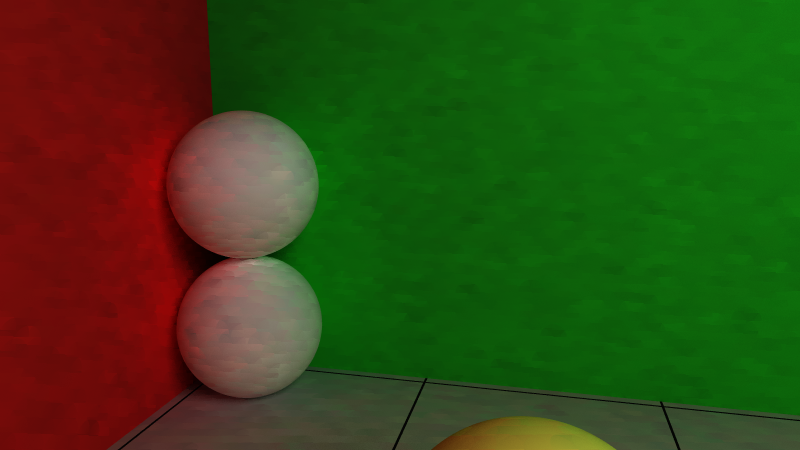

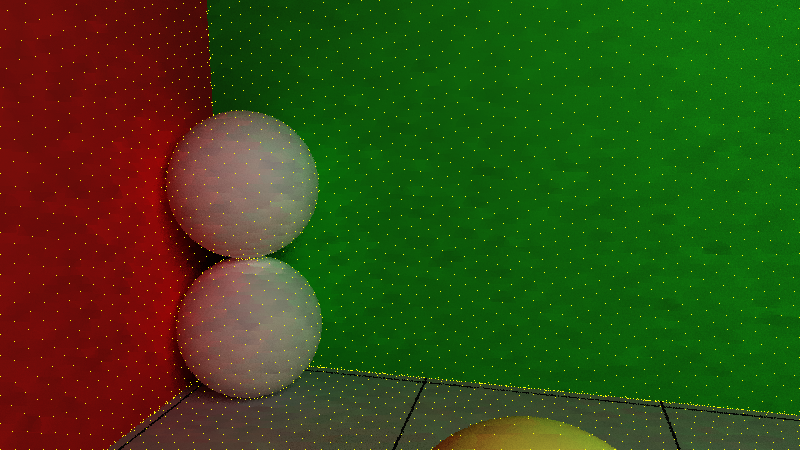

left: PhotonMapping with final gather, right: PathTracing with caustic photons.

left: PhotonMapping with final gather, right: PathTracing. The floor has a Cook-Torrance shader, which shows that importance sampling is working.

The PhotonMapping renderer with final gathering is implemented in the class FinalPhotonMappingRenderer. Unfortunately, reflections of transparent objects and caustics seen in a reflection do not seem to be rendered correctly, and I don't know whether this is an inherent limitation of the method or a bug in my code. The second example, which contains no transparent objects, looks almost the same as the result of the path tracer. The result depends strongly on the number of photons and on the radius used for the irradiance estimate.

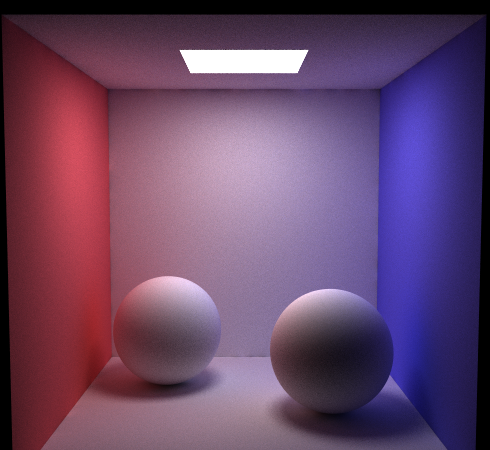

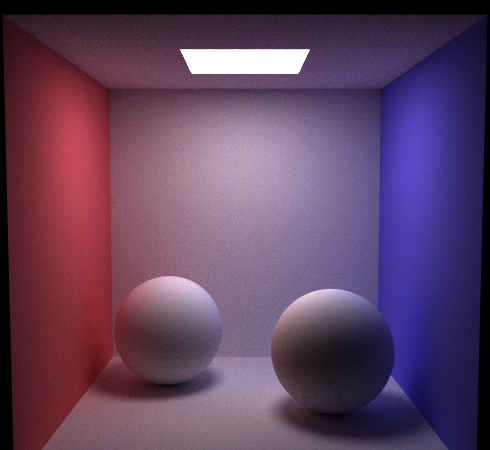

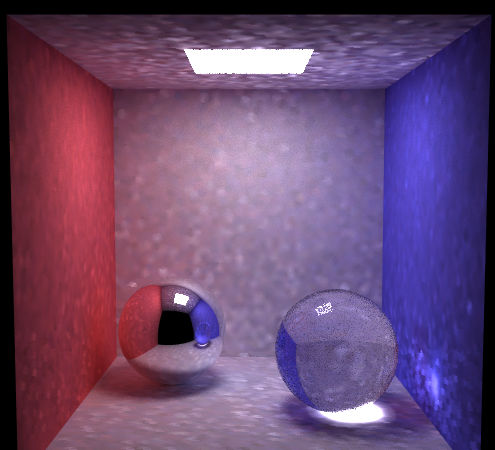

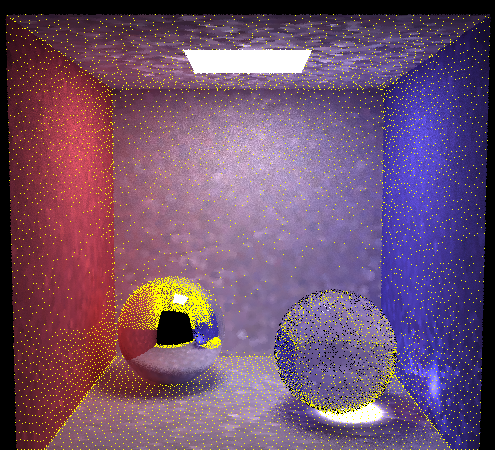

left: the Cornell box rendered with irradiance caching and caustic photons, right: showing the pixels that computed the cache values.

left: GI using irradiance caching with 16 samples, right: showing the pixels that computed the cache values.

I implemented irradiance caching with a small improvement: angular gradients. Since the irradiance cache doesn't handle concurrent access, multithreading has to be turned off when rendering with the irradiance cache. Unfortunately I didn't have time to also include translational gradients, so the rendering is very "blotchy" at settings that give a reasonable rendering time, but the overall illumination looks OK compared to the path tracing solution above. The irradiance values are stored in a linear list, so the speed could be improved significantly by using a tree instead. The cache has one list of values for each bounce level, i.e. number of diffuse bounces. To determine the irradiance at a surface point I shoot 100 rays (this number is reduced quadratically for each recursion level). The implementation can be found in two places: in the file IrradianceCache and in FinalRenderer::trace, where a diffuse surface is hit.
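To make the data structure more concrete, the sketch below shows a list-based cache lookup using the weighting and rotational-gradient interpolation of Ward and Heckbert. It is an assumption that the renderer uses this particular weight function. All names are hypothetical, irradiance is kept as a scalar for brevity (a renderer would store RGB), and `maxError` stands in for whatever accuracy setting the IrradianceCache file exposes.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { double x, y, z; };

inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

struct CacheRecord {
    Vec3 position, normal;   // where the irradiance was computed
    double irradiance;       // scalar here; RGB in a real renderer
    Vec3 rotGradient;        // rotational (angular) irradiance gradient
    double harmonicMeanDist; // harmonic mean distance to the surfaces seen
};

// One linear list of records per diffuse bounce level. Not thread-safe.
class IrradianceCache {
public:
    IrradianceCache(std::size_t levels, double maxError)
        : levels_(levels), maxError_(maxError) {
        assert(levels > 0 && maxError > 0.0);
    }

    void insert(std::size_t level, const CacheRecord& record) {
        levels_.at(level).push_back(record);
    }

    // Returns true and writes the interpolated irradiance if at least one
    // record is close enough; otherwise the caller computes a new value by
    // hemisphere sampling and inserts it.
    bool lookup(std::size_t level, Vec3 p, Vec3 n, double& out) const {
        double sumW = 0.0, sumE = 0.0;
        for (const CacheRecord& r : levels_.at(level)) { // linear scan
            const Vec3 d = p - r.position;
            const double distTerm = std::sqrt(dot(d, d)) / r.harmonicMeanDist;
            const double normalTerm = std::sqrt(std::fmax(0.0, 1.0 - dot(n, r.normal)));
            // Small epsilon avoids a division by zero at the record itself.
            const double w = 1.0 / (distTerm + normalTerm + 1e-9);
            if (w < 1.0 / maxError_) continue; // record too far or too tilted
            // First-order correction for the change in surface orientation.
            sumE += w * (r.irradiance + dot(cross(r.normal, n), r.rotGradient));
            sumW += w;
        }
        if (sumW <= 0.0) return false;
        out = sumE / sumW;
        return true;
    }

private:
    std::vector<std::vector<CacheRecord>> levels_;
    double maxError_;
};
```

The linear scan makes each lookup proportional to the number of records in the level, which is why a spatial tree speeds things up. Making the cache usable from several threads would require guarding `insert` and `lookup`, for example with a mutex or a reader-writer lock.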

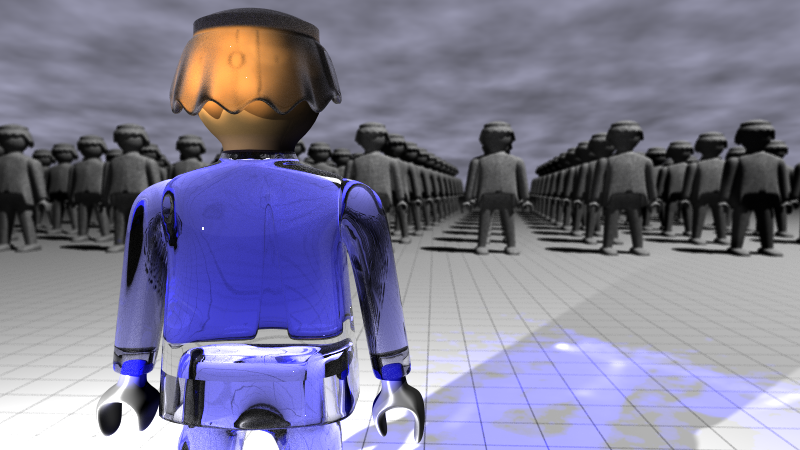

For the final image I used the PhotonMapping renderer with final gather for GI. Most of the features above are present in the image, e.g. the anisotropic Ward shader for the hands, Perlin noise for the sky and for the stone material of the group of characters, and frosted glass for the hair.